Generative AI has moved beyond simple chatbots. The new frontier is building sophisticated AI agents that can reason, use tools, and execute complex, multi-step tasks. But how do you evolve from a single-purpose AI assistant to a robust, multi-agent system that can act as a true digital coworker?

This is a journey from simple automation to genuine augmentation. It requires a shift in thinking and a new architectural blueprint. After extensive research and development, we’ve created a pragmatic approach to building these systems. This article shares that blueprint.

Our Starting Point: The Limits of a Single-Agent System

Many organisations get started with generative AI in a similar fashion. They choose an LLM, perhaps connect to a vector store to enable RAG. This is an impressive start!

Iterations hopefully begin after small signs of success. Perhaps you start iterating with prompts, loading it with context or even swapping out prompts based on upstream predictions.

At this stage, we a contextualized single-agent system. The core AI “brain” is the same; only its instructions changed. This model, while useful, has inherent limitations:

- It’s Brittle: It relies on rigid, upfront classification. If a request doesn’t fit a category or spans multiple topics, it fails.

- It Doesn’t Scale: Adding a new capability requires re-engineering the entire complex prompt and routing logic.

- It Lacks Agency: It cannot handle ambiguous, multi-step problems that require autonomous reasoning.

Pressure from C-suite and boards keep mounting. So let’s look at how we can go from a single-agent system, to an empowered multi-agent system.

The Building Blocks of an Agentic System

Clear definitions are critical. In the blueprint, the system is composed of three distinct components:

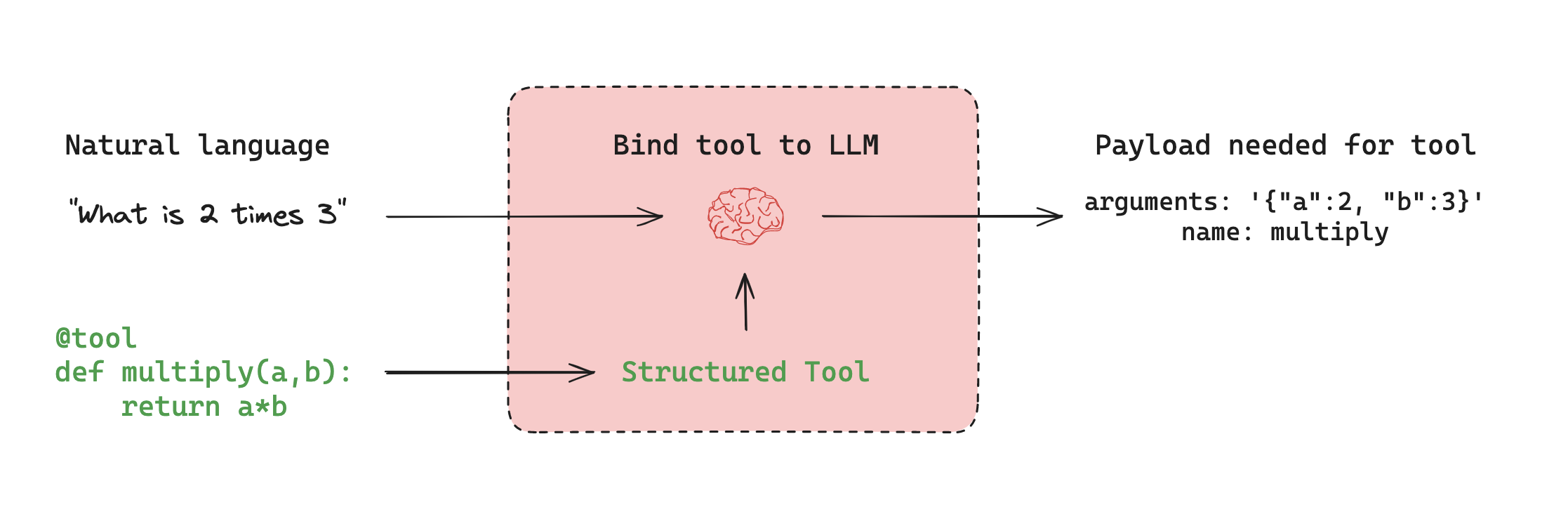

- Tools: A Tool is a single-purpose function that the AI can call to interact with the outside world. They should be deterministic, reliable, and reusable.

- Example:

check_subscription_status(user_id)orissue_refund(amount, user_id).

- Example:

- Workflows: A Workflow is a predefined, scripted sequence of steps, often involving multiple tool calls. They are designed for high-predictability, high-stakes processes where deviation is not an option.

- Agents: An Agent is an autonomous entity powered by a Large Language Model (LLM). Its defining characteristic is its ability to reason. It can understand a goal, plan a sequence of steps, and decide which Tools to use to achieve it.

Workflows execute a script; Agents make decisions. This distinction led us to our most important guiding principle.

The Predictability vs. Agency Spectrum

Not all business processes are created equal. When designing an AI solution, you must decide where it needs to fall on a critical spectrum:

- High Predictability (Workflows): These are for processes that must be executed the same way every time. They are highly testable, reliable, and safe. The logic is deterministic, even if it’s triggered by an AI’s intent detection.

- High Agency (Agents): These are for complex, ambiguous problems that require dynamic problem-solving, like diagnosing a novel technical issue. These agents often use a reasoning framework (like ReAct - Reason and Act) to iteratively use tools and reflect on the results.

Choosing where a task falls on this spectrum is the most important initial design decision. This is a concept described by LangGraph.

A Case Study: Automating Cancellations

To make this tangible, let’s walk through automating an organization’s cancellation request. Since this is a high-stakes “write” action, we placed it on the High Predictability end of the spectrum and designed it as a deterministic workflow.

Intent Detection: First, a generative AI model analyses the incoming user email to detect the intent to cancel. This is the primary AI reasoning step and the riskiest part of the process.

Workflow Trigger: Upon detecting the intent with high confidence, the AI calls a single tool that triggers the cancellation_workflow.

Deterministic Execution: From here, the workflow executes a rigid, pre-scripted set of steps, calling on a library of reusable tools:

- Tool:

check_user_permissions(user_id, org_id) - Tool:

validate_subscription_status(org_id) - Tool:

schedule_cancellation(org_id, effective_date) - Tool:

trigger_win_back_offer(user_id) - Tool:

notify_user(user_id, template='cancellation_scheduled')

- Tool:

Notice that aside from the initial intent detection, the core process is entirely deterministic and script-driven, ensuring it is safe and auditable.

The Architectural Leap: The Supervisor-Agent Model

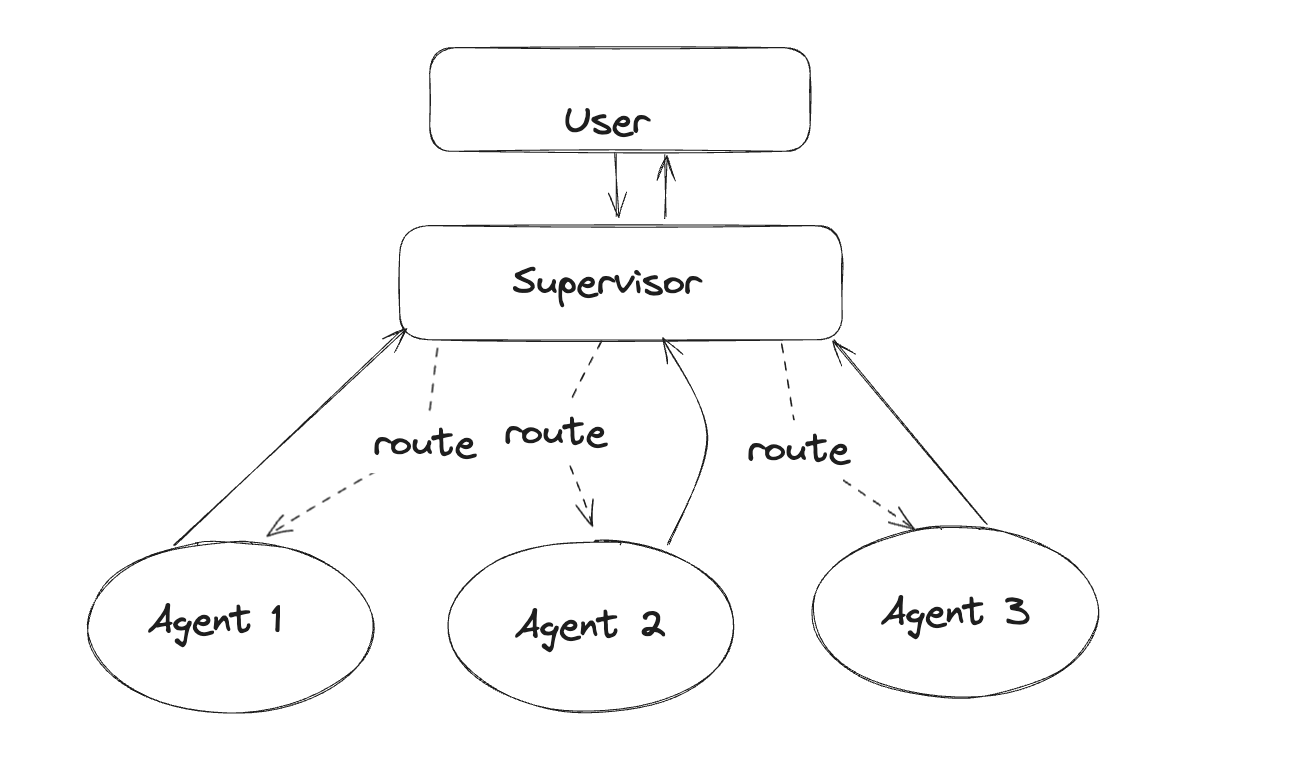

To overcome the limits of our initial single-agent system, we refer to a architecture modeled on how expert teams work: a supervisor orchestrating a team of specialists.

This Supervisor Model is a multi-agent architecture that functions like microservices for AI:

- The Supervisor Agent: This is a master AI router. Its only job is to analyze an incoming request and delegate it to the appropriate specialist agent or workflow. It’s the “manager” of the team.

- Specialist Agents: Each agent is an expert in a specific domain (e.g., BillingAgent, TechnicalSupportAgent). They have their own small set of highly relevant tools.

- The “Compiler”: For complex requests that require multiple skills, the Supervisor can delegate to multiple agents and then “compile” their responses into a single, coherent answer for the user.

This architecture is scalable and resilient. We can add or update a specialist agent without having to reconfigure the entire system—we simply update the Supervisor on the new capability.

Compare this to a more simple architectre like the one below, the supervisor is a ‘fatter’ layer of the overal solution. The diagram below illustrates an example where the supervisor’s primary role is to route requests to a relevant agent or tool. This is in an excellent starting point and perhaps more suited to applications where actions are more important than interactions.

The Unseen Foundation: Guardrails and Evaluation

Finally, none of this is possible without a deep commitment to safety and reliability. This rests on two pillars:

- Guardrails: These are real-time safety checks. They scan user input for malicious prompts, redact sensitive PII before it’s sent to an LLM, and filter the AI’s output for harmful content. They are the AI’s non-negotiable safety system.

- Evaluation: This is the rigorous, continuous testing of the system’s performance. We maintain a “golden dataset” of test cases to evaluate every change against, ensuring accuracy never degrades.

For any high-stakes process, a robust evaluation framework is not just a best practice; it is a core requirement for building trust and ensuring enterprise-readiness. Building this foundation gives us the confidence to innovate faster and more safely.

The journey from a simple AI assistant to a team of collaborative digital coworkers is an architectural and philosophical one. By focusing on a clear vocabulary, embracing the predictability-agency spectrum, and building on a scalable Supervisor model, we can create AI systems that are not just powerful, but also reliable, safe, and truly helpful.